SCOTUS Remands Content Moderation Cases But Still Delivers First Amendment Lessons

In Moody v. NetChoice and NetChoice v. Paxton, 603 U.S. ____ (2024), the U.S. Supreme Court confirmed that social-media platforms have First Amendment interests in exercising editorial discretion over third-party content. However, the Court remanded the cases to the lower courts after concluding that neither appeals court had properly analyzed “the facial First Amendment challenges” to the laws.

Facts of the Case

In 2021, Florida and Texas enacted statutes regulating large social-media companies and other internet platforms. The States’ laws differ in the entities they cover and the activities they limit; however, both restrict the platforms’ capacity to engage in content moderation—to filter, prioritize, and label the varied third-party messages, videos, and other content their users wish to post. Both laws also include individualized-explanation provisions, requiring a platform to give reasons to a user if it removes or alters her posts.

NetChoice LLC and the Computer & Communications Industry Association (collectively, NetChoice)—trade associations whose members include Facebook and YouTube—brought facial First Amendment challenges against the two laws. District courts in both States entered preliminary injunctions. The Eleventh Circuit Court of Appeals largely upheld the injunction of Florida’s law, holding that the State’s restrictions on content moderation trigger First Amendment scrutiny under the Supreme Court’s cases protecting “editorial discretion.” It further concluded that the content-moderation provisions are unlikely to survive heightened scrutiny.

The Eleventh Circuit similarly found that the statute’s individualized-explanation requirements were likely to fall. Citing Zauderer v. Office of Disciplinary Counsel of Supreme Court of Ohio, 471 U.S. 626 (1985), the court held that the obligation to explain “millions of [decisions] per day” is “unduly burdensome and likely to chill platforms’ protected speech.”

The Fifth Circuit Court of Appeals reached the opposite conclusion, reversing the preliminary injunction of the Texas law. According to the Fifth Circuit, the platforms’ content-moderation activities are “not speech” at all, and so do not implicate the First Amendment. Even if those activities were expressive, the court determined the State could regulate them to advance its interest in “protecting a diversity of ideas.” The Fifth Circuit further held that the statute’s individualized-explanation provisions would likely survive, even assuming the platforms were engaged in speech. It found no undue burden under Zauderer because the platforms needed only to “scale up” a “complaint-and-appeal process” they already used.

Supreme Court’s Decision

By a vote of 6-3, the Supreme Court vacated the judgments after determining that neither the Eleventh Circuit nor the Fifth Circuit had conducted a proper analysis of the facial First Amendment challenges to the Florida and Texas laws.

In reaching its decision, the Court emphasized that facial challenges are difficult to win because a plaintiff must show that “a substantial number of [the law’s] applications are unconstitutional, judged in relation to the statute’s plainly legitimate sweep.” The Court went on to find that the lower courts had failed to adequately address the full range of activities the laws cover and to measure the constitutional applications against the unconstitutional ones. “In short, they treated these cases more like as-applied claims than like facial ones,” Justice Elena Kagan wrote.

According to the Court, the first step in the proper facial analysis is to assess the state laws’ scope. “What activities, by what actors, do the laws prohibit or otherwise regulate? The laws of course differ one from the other. But both, at least on their face, appear to apply beyond Facebook’s News Feed and its ilk,” Justice Kagan wrote. She went on to explain that the next order of business is to decide which of the laws’ applications violate the First Amendment, and to measure them against the rest. “For the content-moderation provisions, that means asking, as to every covered platform or function, whether there is an intrusion on protected editorial discretion,” she wrote. “And for the individualized-explanation provisions, it means asking, again as to each thing covered, whether the required disclosures unduly burden expression.”

While the Court acknowledged that it is now up to the lower courts to consider the scope of the laws’ applications and to weigh the unconstitutional applications against the constitutional ones, it offered additional guidance on how the First Amendment applies to the laws’ content-moderation provisions.

The Court first emphasized that the First Amendment offers protection when an entity engaged in compiling and curating others’ speech into an expressive product of its own is directed to accommodate messages it would prefer to exclude. That protection does not disappear just because a compiler includes most items and excludes just a few.

“When the platforms use their Standards and Guidelines to decide which third-party content those feeds will display, or how the display will be ordered and organized, they are making expressive choices,” Justice Kagan wrote. “And because that is true, they receive First Amendment protection.”

The Court also noted that its precedents confirm the government cannot get its way just by asserting an interest in better balancing the marketplace of ideas. “However imperfect the private marketplace of ideas, here was a worse proposal — the government itself deciding when speech was imbalanced, and then coercing speakers to provide more of some views or less of others,” Justice Kagan wrote.

Based on these First Amendment principles, Justice Kagan strongly suggested that the Fifth Circuit erred and that Texas is unlikely to succeed in enforcing its law against the platforms’ application of their content-moderation policies to their main feeds. “Contrary to what the Fifth Circuit thought, the current record indicates that the Texas law does regulate speech when applied in the way the parties focused on below — when applied, that is, to prevent Facebook (or YouTube) from using its content-moderation standards to remove, alter, organize, prioritize, or disclaim posts in its News Feed (or homepage),” Kagan wrote. “The law then prevents exactly the kind of editorial judgments this Court has previously held to receive First Amendment protection.”

Previous Articles

Supreme Court Rejects Moment of Threat Doctrine in Deadly Force Case

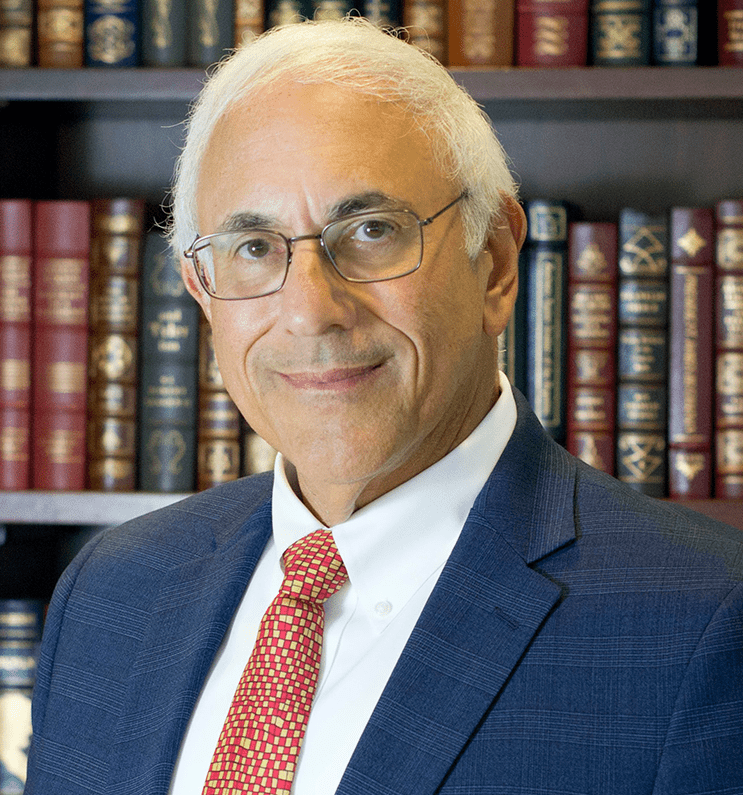

by DONALD SCARINCI on June 30, 2025

In Barnes v. Felix, 605 U.S. ____ (2025), the U.S. Supreme Court rejected the Fifth Circuit Court o...

SCOTUS Holds Wire Fraud Statute Doesn’t Require Proof Victim Suffered Economic Loss

by DONALD SCARINCI on June 24, 2025

In Kousisis v. United States, 605 U.S. ____ (2025), the U.S. Supreme Court held that a defendant wh...

SCOTUS Holds Wire Fraud Statute Doesn’t Require Proof Victim Suffered Economic Loss

by DONALD SCARINCI on June 17, 2025

In Kousisis v. United States, 605 U.S. ____ (2025), the U.S. Supreme Court held that a defendant wh...

The Amendments

-

Amendment1

- Establishment ClauseFree Exercise Clause

- Freedom of Speech

- Freedoms of Press

- Freedom of Assembly, and Petitition

-

Amendment2

- The Right to Bear Arms

-

Amendment4

- Unreasonable Searches and Seizures

-

Amendment5

- Due Process

- Eminent Domain

- Rights of Criminal Defendants

Preamble to the Bill of Rights

Congress of the United States begun and held at the City of New-York, on Wednesday the fourth of March, one thousand seven hundred and eighty nine.

THE Conventions of a number of the States, having at the time of their adopting the Constitution, expressed a desire, in order to prevent misconstruction or abuse of its powers, that further declaratory and restrictive clauses should be added: And as extending the ground of public confidence in the Government, will best ensure the beneficent ends of its institution.